10.4 The Least Squares Regression Line

1. Goodness of Fit of a Straight Line to Data

Once the scatter diagram of the data has been drawn and the model assumptions described in the previous sections have been at least visually verified (and perhaps the correlation coefficient $r$ computed to quantitatively verify the linear trend), the next step in the analysis is to find the straight line that best fits the data. We will explain how to measure how well a straight line fits a collection of points by examining how well the line $y = \frac{1}{2}x - 1$ fits the data set

| $x$ | 2 | 2 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| $y$ | 0 | 1 | 2 | 3 | 3 |

(which will be used as a running example for the next three sections). We will write the equation of this line as $\hat{y} = \frac{1}{2}x - 1$, with an accent on the $y$ to indicate that the $y$-values computed using this equation are not from the data. We will do this with all lines approximating data sets. The line $\hat{y} = \frac{1}{2}x - 1$ was selected as one that seems to fit the data reasonably well.

The idea for measuring the goodness of fit of a straight line to data is illustrated in Figure 10.6 "Plot of the Five-Point Data and the Line $\hat{y} = \frac{1}{2}x - 1$", in which the graph of the line $\hat{y} = \frac{1}{2}x - 1$ has been superimposed on the scatter plot for the sample data set.

Figure 10.6 Plot of the Five-Point Data and the Line $\hat{y} = \frac{1}{2}x - 1$

To each point in the data set there is associated an “error,” the positive or negative vertical distance from the point to the line: positive if the point is above the line and negative if it is below the line.

The error can be computed as the actual y-value of the point minus the y-value y^ that is “predicted” by inserting the x-value of the data point into the formula for the line:

$$\text{error at data point } (x, y) = (\text{true } y) - (\text{predicted } y) = y - \hat{y}$$

The computation of the error for each of the five points in the data set is shown in Table 10.1 "The Errors in Fitting Data with a Straight Line".

Table 10.1 The Errors in Fitting Data with a Straight Line

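| $x$ | $y$ | $\hat{y} = \frac{1}{2}x - 1$ | $y - \hat{y}$ | $(y - \hat{y})^2$ |
|---|---|---|---|---|
| 2 | 0 | 0 | 0 | 0 |
| 2 | 1 | 0 | 1 | 1 |
| 6 | 2 | 2 | 0 | 0 |
| 8 | 3 | 3 | 0 | 0 |
| 10 | 3 | 4 | −1 | 1 |
| $\Sigma$ | - | - | 0 | 2 |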

A first thought for a measure of the goodness of fit of the line to the data would be simply to add the errors at every point, but the example shows that this cannot work well in general. The line does not fit the data perfectly (no line can), yet because of cancellation of positive and negative errors the sum of the errors (the fourth column of numbers) is zero.

Instead, goodness of fit is measured by the sum of the squares of the errors. Squaring eliminates the minus signs, so no cancellation can occur. For the data and line in Figure 10.6 "Plot of the Five-Point Data and the Line $\hat{y} = \frac{1}{2}x - 1$", the sum of the squared errors (the last column of numbers) is 2. This number measures the goodness of fit of the line to the data.

The goodness of fit of a line $\hat{y} = mx + b$ to a set of $n$ pairs $(x, y)$ of numbers in a sample is the sum of the squared errors

$$\Sigma(y - \hat{y})^2$$

($n$ terms in the sum, one for each data pair).
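A minimal Python sketch of this definition follows; the helper names `errors` and `sum_squared_errors` are our own illustrative choices, not from the text. It fits the five-point data with the line $\hat{y} = \frac{1}{2}x - 1$ and reproduces the two sums from Table 10.1: the raw errors cancel to 0, while the squared errors add to 2.

```python
def errors(xs, ys, m, b):
    """Return the errors y - y_hat for the line y_hat = m*x + b."""
    return [y - (m * x + b) for x, y in zip(xs, ys)]


def sum_squared_errors(xs, ys, m, b):
    """Goodness of fit: the sum of the squared errors for y_hat = m*x + b."""
    return sum(e ** 2 for e in errors(xs, ys, m, b))


# Five-point running example and the line y_hat = (1/2)x - 1
xs = [2, 2, 6, 8, 10]
ys = [0, 1, 2, 3, 3]

print(errors(xs, ys, 0.5, -1))              # [0.0, 1.0, 0.0, 0.0, -1.0]
print(sum(errors(xs, ys, 0.5, -1)))         # 0.0  (positive and negative errors cancel)
print(sum_squared_errors(xs, ys, 0.5, -1))  # 2.0
```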

2. The Least Squares Regression Line

Given any collection of pairs of numbers (except when all the $x$-values are the same) and the corresponding scatter diagram, there always exists exactly one straight line that fits the data better than any other, in the sense of minimizing the sum of the squared errors. It is called the least squares regression line. Moreover, there are formulas for its slope and $y$-intercept.

Given a collection of pairs $(x, y)$ of numbers (in which not all the $x$-values are the same), there is a line $\hat{y} = \hat{\beta}_1 x + \hat{\beta}_0$ that best fits the data in the sense of minimizing the sum of the squared errors. It is called the least squares regression line. Its slope $\hat{\beta}_1$ and $y$-intercept $\hat{\beta}_0$ are computed using the formulas

$$\hat{\beta}_1 = \frac{SS_{xy}}{SS_{xx}} \quad \text{and} \quad \hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x}$$

where

$$SS_{xx} = \Sigma x^2 - \frac{1}{n}(\Sigma x)^2, \qquad SS_{xy} = \Sigma xy - \frac{1}{n}(\Sigma x)(\Sigma y)$$

$\bar{x}$ is the mean of all the $x$-values, $\bar{y}$ is the mean of all the $y$-values, and $n$ is the number of pairs in the data set.

The equation $\hat{y} = \hat{\beta}_1 x + \hat{\beta}_0$ specifying the least squares regression line is called the least squares regression equation.
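The formulas above translate almost line for line into code. The sketch below is one possible Python rendering (the function name `least_squares_line` is hypothetical); it refuses data in which every $x$-value is the same, the one case the definition excludes, because then $SS_{xx} = 0$.

```python
def least_squares_line(xs, ys):
    """Return (beta1_hat, beta0_hat) for the line y_hat = beta1_hat*x + beta0_hat.

    Uses the textbook formulas
        SSxx = sum(x^2) - (sum x)^2 / n
        SSxy = sum(x*y) - (sum x)(sum y) / n
        beta1_hat = SSxy / SSxx,  beta0_hat = y_bar - beta1_hat * x_bar
    """
    n = len(xs)
    if n == 0 or n != len(ys):
        raise ValueError("xs and ys must be non-empty and of equal length")
    if len(set(xs)) < 2:
        # All x-values equal: SSxx = 0 and the line is undefined.
        raise ValueError("all x-values are the same")
    sum_x, sum_y = sum(xs), sum(ys)
    ss_xx = sum(x * x for x in xs) - sum_x ** 2 / n
    ss_xy = sum(x * y for x, y in zip(xs, ys)) - sum_x * sum_y / n
    beta1 = ss_xy / ss_xx
    beta0 = sum_y / n - beta1 * sum_x / n  # y_bar - beta1_hat * x_bar
    return beta1, beta0


beta1_hat, beta0_hat = least_squares_line([2, 2, 6, 8, 10], [0, 1, 2, 3, 3])
print(beta1_hat, beta0_hat)  # approximately 0.34375 and -0.125
```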

Remember from Section 10.3 "Modelling Linear Relationships with Randomness Present" that the line with the equation $y = \beta_1 x + \beta_0$ is called the population regression line. The numbers $\hat{\beta}_1$ and $\hat{\beta}_0$ are statistics that estimate the population parameters $\beta_1$ and $\beta_0$.

We will compute the least squares regression line for the five-point data set, then for a more practical example that will be another running example for the introduction of new concepts in this and the next three sections.

EXAMPLE 2. Find the least squares regression line for the five-point data set

| $x$ | 2 | 2 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| $y$ | 0 | 1 | 2 | 3 | 3 |

and verify that it fits the data better than the line $\hat{y} = \frac{1}{2}x - 1$ considered in Section 10.4.1 "Goodness of Fit of a Straight Line to Data".

[ Solution ]

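$$SS_{xx} = \Sigma x^2 - \frac{1}{n}(\Sigma x)^2 = 208 - \frac{1}{5}(28)^2 = 51.2$$

$$SS_{xy} = \Sigma xy - \frac{1}{n}(\Sigma x)(\Sigma y) = 68 - \frac{1}{5}(28)(9) = 17.6$$

$$\bar{x} = \frac{\Sigma x}{n} = \frac{28}{5} = 5.6$$

$$\bar{y} = \frac{\Sigma y}{n} = \frac{9}{5} = 1.8$$

so that

$$\hat{\beta}_1 = \frac{SS_{xy}}{SS_{xx}} = \frac{17.6}{51.2} = 0.34375$$

$$\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x} = 1.8 - (0.34375)(5.6) = -0.125$$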

The least squares regression line for these data is

$$\hat{y} = 0.34375x - 0.125$$
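Because every quantity in this example is a ratio of integers, the arithmetic can be checked exactly. The following sketch, assuming Python's standard `fractions` module, reproduces each step and shows that $\hat{\beta}_1 = 11/32$ and $\hat{\beta}_0 = -1/8$ exactly, so the decimals 0.34375 and −0.125 involve no rounding.

```python
from fractions import Fraction

# Five-point data set from the running example
xs = [2, 2, 6, 8, 10]
ys = [0, 1, 2, 3, 3]
n = len(xs)

sum_x, sum_y = sum(xs), sum(ys)                        # 28, 9
sum_x2 = sum(x * x for x in xs)                        # 208
sum_xy = sum(x * y for x, y in zip(xs, ys))            # 68

ss_xx = sum_x2 - Fraction(sum_x ** 2, n)               # 256/5 = 51.2
ss_xy = sum_xy - Fraction(sum_x * sum_y, n)            # 88/5  = 17.6
x_bar, y_bar = Fraction(sum_x, n), Fraction(sum_y, n)  # 28/5, 9/5

beta1 = ss_xy / ss_xx                                  # 11/32 = 0.34375
beta0 = y_bar - beta1 * x_bar                          # -1/8  = -0.125
print(beta1, beta0, float(beta1), float(beta0))        # 11/32 -1/8 0.34375 -0.125
```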

The computations for measuring how well it fits the sample data are given in Table 10.2 "The Errors in Fitting Data with the Least Squares Regression Line". The sum of the squared errors is the sum of the numbers in the last column, which is 0.75. It is less than 2, the sum of the squared errors for the fit of the line $\hat{y} = \frac{1}{2}x - 1$ to this data set.

Table 10.2 The Errors in Fitting Data with the Least Squares Regression Line

| $x$ | $y$ | $\hat{y} = 0.34375x - 0.125$ | $y - \hat{y}$ | $(y - \hat{y})^2$ |
|---|---|---|---|---|
| 2 | 0 | 0.5625 | −0.5625 | 0.31640625 |
| 2 | 1 | 0.5625 | 0.4375 | 0.19140625 |
| 6 | 2 | 1.9375 | 0.0625 | 0.00390625 |
| 8 | 3 | 2.6250 | 0.3750 | 0.14062500 |
| 10 | 3 | 3.3125 | −0.3125 | 0.09765625 |
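To check Table 10.2 and the comparison with $\hat{y} = \frac{1}{2}x - 1$, here is a short Python sketch (the helper `sse` is our own). It also nudges the slope and intercept slightly in each direction to illustrate, for this data set only, that moving away from the least squares line increases the sum of the squared errors.

```python
# Five-point data and the least squares line from Example 2: y_hat = 0.34375x - 0.125
xs = [2, 2, 6, 8, 10]
ys = [0, 1, 2, 3, 3]
B1, B0 = 0.34375, -0.125


def sse(m, b):
    """Sum of squared errors of the line y_hat = m*x + b on the five-point data."""
    return sum((y - (m * x + b)) ** 2 for x, y in zip(xs, ys))


# Rows of Table 10.2: x, y, y_hat, error, squared error
for x, y in zip(xs, ys):
    y_hat = B1 * x + B0
    print(x, y, y_hat, y - y_hat, (y - y_hat) ** 2)

print(sse(B1, B0))   # 0.75 (least squares line)
print(sse(0.5, -1))  # 2.0  (the line y_hat = (1/2)x - 1)

# Small nudges to the slope or intercept in either direction all increase the SSE.
nudges = [(0.01, 0), (-0.01, 0), (0, 0.01), (0, -0.01)]
better = all(sse(B1 + dm, B0 + db) > sse(B1, B0) for dm, db in nudges)
print(better)  # True
```

The coefficients 0.34375 and −0.125 are exactly representable as binary floating-point numbers, so these particular sums come out without rounding error.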

EXAMPLE 3. Table 10.3 "Data on Age and Value of Used Automobiles of a Specific Make and Model" shows the age in years and the retail value in thousands of dollars of a random sample of ten automobiles of the same make and model.

(a) Construct the scatter diagram.

(b) Compute the linear correlation coefficient $r$. Interpret its value in the context of the problem.

(c) Compute the least squares regression line. Plot it on the scatter diagram.

(d) Interpret the meaning of the slope of the least squares regression line in the context of the problem.

(e) Suppose a four-year-old automobile of this make and model is selected at random. Use the regression equation to predict its retail value.

(f) Suppose a 20-year-old automobile of this make and model is selected at random. Use the regression equation to predict its retail value. Interpret the result.

(g) Comment on the validity of using the regression equation to predict the price of a brand new automobile of this make and model.

Table 10.3 Data on Age and Value of Used Automobiles of a Specific Make and Model

| $x$ | 2 | 3 | 3 | 3 | 4 | 4 | 5 | 5 | 5 | 6 |
|---|---|---|---|---|---|---|---|---|---|---|
| $y$ | 28.7 | 24.8 | 26.0 | 30.5 | 23.8 | 24.6 | 23.8 | 20.4 | 21.6 | 22.1 |

[ Solution ]

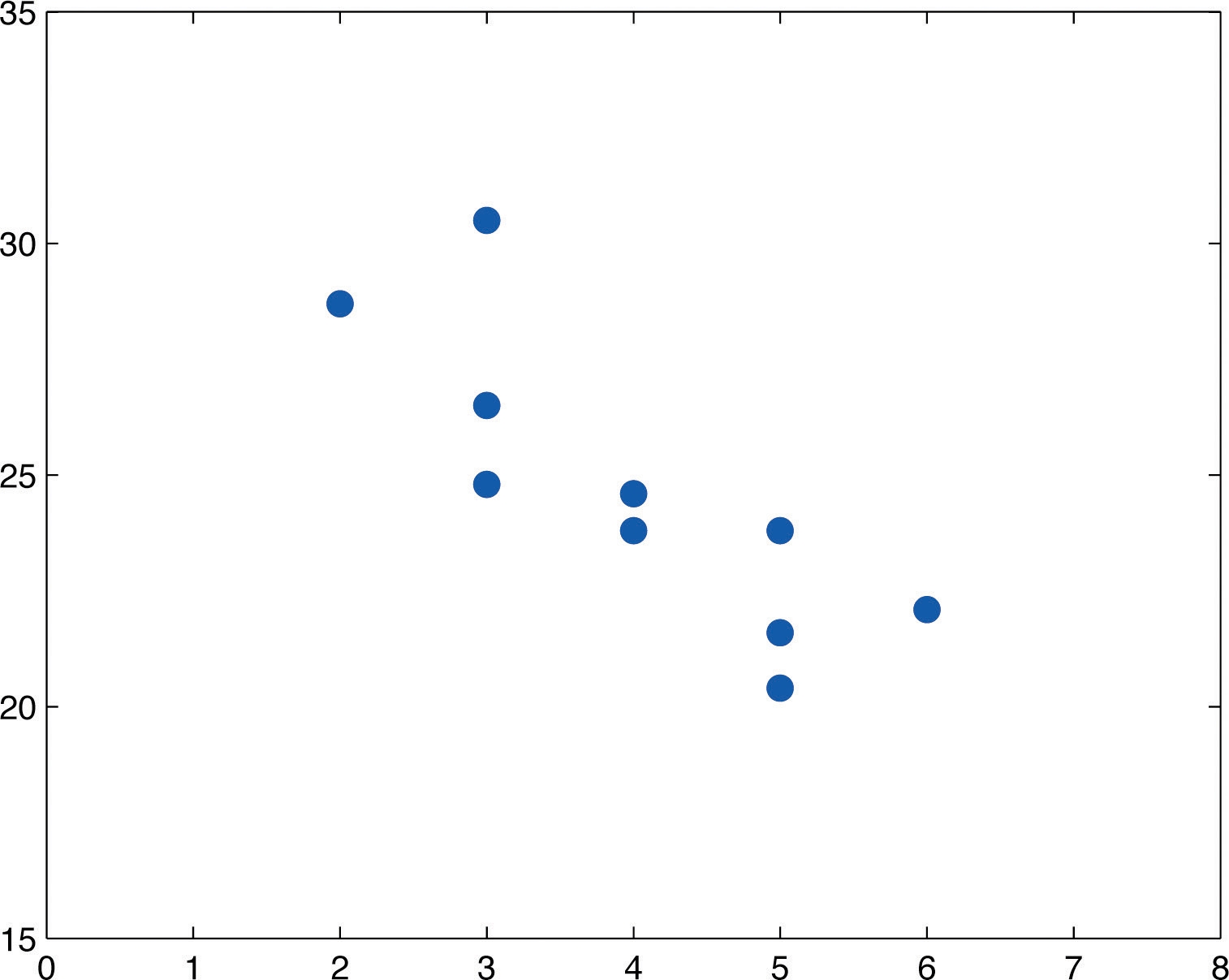

Figure 10.7 Scatter Diagram for Age and Value of Used Automobiles

(a) The scatter diagram is shown in Figure 10.7 "Scatter Diagram for Age and Value of Used Automobiles".

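(b) We must first compute $SS_{xx}$, $SS_{xy}$, and $SS_{yy}$, which means computing $\Sigma x$, $\Sigma y$, $\Sigma x^2$, $\Sigma y^2$, and $\Sigma xy$. Using a computing device we obtain

$$\Sigma x = 40, \quad \Sigma y = 246.3, \quad \Sigma x^2 = 174, \quad \Sigma y^2 = 6154.15, \quad \Sigma xy = 956.5$$

Thus

$$SS_{xx} = \Sigma x^2 - \frac{1}{n}(\Sigma x)^2 = 174 - \frac{1}{10}(40)^2 = 14$$

$$SS_{xy} = \Sigma xy - \frac{1}{n}(\Sigma x)(\Sigma y) = 956.5 - \frac{1}{10}(40)(246.3) = -28.7$$

$$SS_{yy} = \Sigma y^2 - \frac{1}{n}(\Sigma y)^2 = 6154.15 - \frac{1}{10}(246.3)^2 = 87.781$$

so that

$$r = \frac{SS_{xy}}{\sqrt{SS_{xx} \cdot SS_{yy}}} = \frac{-28.7}{\sqrt{(14)(87.781)}} = -0.819$$

The age and value of this make and model automobile are moderately strongly negatively correlated: as the age increases, the value of the automobile tends to decrease.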

(c) Using the values of $\Sigma x$ and $\Sigma y$ computed in part (b),

$$\bar{x} = \frac{\Sigma x}{n} = \frac{40}{10} = 4$$

$$\bar{y} = \frac{\Sigma y}{n} = \frac{246.3}{10} = 24.63$$

Thus, using the values of $SS_{xx}$ and $SS_{xy}$ from part (b),

$$\hat{\beta}_1 = \frac{SS_{xy}}{SS_{xx}} = \frac{-28.7}{14} = -2.05$$

$$\hat{\beta}_0 = \bar{y} - \hat{\beta}_1 \bar{x} = 24.63 - (-2.05)(4) = 32.83$$

The equation $\hat{y} = \hat{\beta}_1 x + \hat{\beta}_0$ of the least squares regression line for these sample data is

$$\hat{y} = -2.05x + 32.83$$

Figure 10.8 "Scatter Diagram and Regression Line for Age and Value of Used Automobiles" shows the scatter diagram with the graph of the least squares regression line superimposed.

Figure 10.8 Scatter Diagram and Regression Line for Age and Value of Used Automobiles

(d) The slope −2.05 means that for each unit increase in x (each additional year of age) the average value of this make and model vehicle decreases by about 2.05 units (about $2,050).

(e) Since we know nothing about the automobile other than its age, we assume that it is of about average value and use the average value of all four-year-old vehicles of this make and model as our estimate. The average value is simply the value of $\hat{y}$ obtained when the number 4 is inserted for $x$ in the least squares regression equation:

$$\hat{y} = -2.05x + 32.83 = -2.05(4) + 32.83 = 24.63$$

which corresponds to $24,630.

(f) Now we insert $x = 20$ into the least squares regression equation to obtain

$$\hat{y} = -2.05x + 32.83 = -2.05(20) + 32.83 = -8.17$$

which corresponds to −\$8,170. Something is wrong here, since a negative value makes no sense. The error arose from applying the regression equation to a value of $x$ not in the range of $x$-values in the original data, which runs from two to six years.

Applying the regression equation $\hat{y} = \hat{\beta}_1 x + \hat{\beta}_0$ to a value of $x$ outside the range of $x$-values in the data set is called extrapolation. It is an invalid use of the regression equation and should be avoided.
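One way to honor this warning in code is to make the prediction function check the range of the data. The sketch below hard-codes the coefficients and age range from this example; `predict_value` and its range check are hypothetical illustrations, not part of the text.

```python
# Least squares line for the used-car data in Example 3: y_hat = -2.05x + 32.83
BETA1, BETA0 = -2.05, 32.83
X_MIN, X_MAX = 2, 6  # smallest and largest ages (years) in Table 10.3


def predict_value(age):
    """Predicted value in thousands of dollars for a car of the given age.

    Hypothetical helper: raises ValueError instead of extrapolating
    outside the range of ages used to fit the line.
    """
    if not X_MIN <= age <= X_MAX:
        raise ValueError(
            f"age {age} is outside the data range [{X_MIN}, {X_MAX}]; "
            "this would be extrapolation"
        )
    return BETA1 * age + BETA0


print(round(predict_value(4), 2))  # 24.63, i.e. about $24,630

try:
    predict_value(20)  # plugging in directly would give -8.17
except ValueError as err:
    print(err)
```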

(g) The price of a brand new vehicle of this make and model is the value of the automobile at age 0. If the value $x = 0$ is inserted into the regression equation, the result is always $\hat{\beta}_0$, the $y$-intercept, in this case 32.83, which corresponds to \$32,830. But this is a case of extrapolation, just as part (f) was, hence this result is invalid, although not obviously so. In the context of the problem, since automobiles tend to lose value much more quickly immediately after they are purchased than they do after they are several years old, the number \$32,830 is probably an underestimate of the price of a new automobile of this make and model.

For emphasis we highlight the points raised by parts (f) and (g) of the example.

The process of using the least squares regression equation to estimate the value of y at a value of x that does not lie in the range of the x-values in the data set that was used to form the regression line is called extrapolation. It is an invalid use of the regression equation that can lead to errors, hence should be avoided.

3. The Sum of the Squared Errors (SSE)

In general, in order to measure the goodness of fit of a line to a set of data, we must compute the predicted $y$-value $\hat{y}$ at every point in the data set, compute each error, square it, and then add up all the squares. For the least squares regression line, however, which is the line that best fits the data, the sum of the squared errors can be computed directly from the data using the following formula.

The sum of the squared errors for the least squares regression line is denoted by $SSE$. It can be computed using the formula

$$SSE = SS_{yy} - \hat{\beta}_1 SS_{xy}$$

where $SS_{yy} = \Sigma y^2 - \frac{1}{n}(\Sigma y)^2$.
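A quick numerical check that the shortcut agrees with the definition: the sketch below (the function name `least_squares_sse` is hypothetical) fits the least squares line with the formulas of this section and computes $SSE$ both ways for that same line.

```python
def least_squares_sse(xs, ys):
    """Return (shortcut SSE, definitional SSE) for the least squares line."""
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    ss_xx = sum(x * x for x in xs) - sx ** 2 / n
    ss_yy = sum(y * y for y in ys) - sy ** 2 / n
    ss_xy = sum(x * y for x, y in zip(xs, ys)) - sx * sy / n
    beta1 = ss_xy / ss_xx
    beta0 = sy / n - beta1 * sx / n
    shortcut = ss_yy - beta1 * ss_xy                      # SSE = SSyy - beta1_hat * SSxy
    definition = sum((y - (beta1 * x + beta0)) ** 2       # sum of (y - y_hat)^2
                     for x, y in zip(xs, ys))
    return shortcut, definition


shortcut, definition = least_squares_sse([2, 2, 6, 8, 10], [0, 1, 2, 3, 3])
print(shortcut, definition)  # both approximately 0.75
```

When a line fits well, the shortcut subtracts two nearly equal quantities, so in floating-point arithmetic on large data sets the point-by-point sum can be the more accurate of the two.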

EXAMPLE 4. Find the sum of the squared errors SSE for the least squares regression line for the five-point data set

| $x$ | 2 | 2 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| $y$ | 0 | 1 | 2 | 3 | 3 |

Do so in two ways:

(a) using the definition $\Sigma(y - \hat{y})^2$;

(b) using the formula $SSE = SS_{yy} - \hat{\beta}_1 SS_{xy}$.

[ Solution ]

(a) The least squares regression line was computed in Note 10.18 "Example 2" and is $\hat{y} = 0.34375x - 0.125$. $SSE$ was found at the end of that example using the definition $\Sigma(y - \hat{y})^2$. The computations were tabulated in Table 10.2 "The Errors in Fitting Data with the Least Squares Regression Line". $SSE$ is the sum of the numbers in the last column, which is 0.75.

(b) The numbers $SS_{xy}$ and $\hat{\beta}_1$ were already computed in Note 10.18 "Example 2" in the process of finding the least squares regression line. So was the number $\Sigma y = 9$. We must compute $SS_{yy}$. To do so it is necessary to first compute

$$\Sigma y^2 = 0^2 + 1^2 + 2^2 + 3^2 + 3^2 = 23$$

Then

$$SS_{yy} = \Sigma y^2 - \frac{1}{n}(\Sigma y)^2 = 23 - \frac{1}{5}(9)^2 = 6.8$$

so that

$$SSE = SS_{yy} - \hat{\beta}_1 SS_{xy} = 6.8 - (0.34375)(17.6) = 0.75$$

EXAMPLE 5. Find the sum of the squared errors SSE for the least squares regression line for the data set on age and values of used vehicles presented in Table 10.3 "Data on Age and Value of Used Automobiles of a Specific Make and Model" in Note 10.19 "Example 3".

[ Solution ]

From Note 10.19 "Example 3" we already know that $SS_{xy} = -28.7$, $\hat{\beta}_1 = -2.05$, and $\Sigma y = 246.3$.

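To compute $SS_{yy}$ we first compute

$$\Sigma y^2 = 28.7^2 + 24.8^2 + 26.0^2 + 30.5^2 + 23.8^2 + 24.6^2 + 23.8^2 + 20.4^2 + 21.6^2 + 22.1^2 = 6154.15$$

Then

$$SS_{yy} = \Sigma y^2 - \frac{1}{n}(\Sigma y)^2 = 6154.15 - \frac{1}{10}(246.3)^2 = 87.781$$

Therefore

$$SSE = SS_{yy} - \hat{\beta}_1 SS_{xy} = 87.781 - (-2.05)(-28.7) = 28.946$$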
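As a final check, this sketch evaluates the shortcut with the quantities found above and then sums the squared errors of $\hat{y} = -2.05x + 32.83$ point by point over Table 10.3; both should round to 28.946.

```python
# Table 10.3: age x (years) and retail value y (thousands of dollars)
ages = [2, 3, 3, 3, 4, 4, 5, 5, 5, 6]
values = [28.7, 24.8, 26.0, 30.5, 23.8, 24.6, 23.8, 20.4, 21.6, 22.1]

# Shortcut formula with the quantities from Examples 3 and 5
ss_yy, beta1, ss_xy = 87.781, -2.05, -28.7
print(round(ss_yy - beta1 * ss_xy, 3))  # 28.946

# Definition: sum of squared errors of y_hat = -2.05x + 32.83, point by point
sse = sum((y - (-2.05 * x + 32.83)) ** 2 for x, y in zip(ages, values))
print(round(sse, 3))  # 28.946
```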
